When I started programming properly in 2012 during my degree, there were a few truths. C# and Visual Studio were for Windows, Python and Perl were for Unix, and Mac OS was something I just didn’t ever want to touch.

Visual Studio also cost a bomb. I only had a copy because my university was kind enough to furnish me with a two-year licence for VS2010, which I used in full; then, just before my account was suspended, I managed to nab a copy of VS2013, which carried me until 2016. I tried making a few cross-platform apps in the beginning, but unless I was using Mono or something far more basic like JavaScript, cross-platform wasn’t really a thing.

Lo and behold, Microsoft went and changed up their style. They’re now shipping free versions of Visual Studio, and not only that, but the Community editions are actually quite powerful (this might always have been the case, but since I had free Professional editions I didn’t look too hard). Either way, I’m impressed with the level of features available in the Community editions, especially given that they’re free. Then a few months later one of my co-workers, Rogue Planetoid, mentioned that Microsoft were releasing .NET Core, a cross-platform SDK that works with Visual Studio and can run on Unix, Mac and still natively on Windows.

The framework

This might be old tech by the time you read this, as .NET Core 2 has now been released and I never bothered to give 1 or 1.1 a go, but I finally did get round to upgrading VS2017 Community and getting the SDK from the Microsoft site. I won’t go into what I was working on, because frankly that’s a bit of a lengthy conversation [My GitHub for the project], but it was effectively a console application. At the moment .NET Core 2 supports ASP.NET websites and console applications, so unfortunately my bizarre love for Windows Forms isn’t catered for yet. But I was keen to get my console app running on my CentOS server.

First of all, you can’t convert an existing application to a .NET Core app, or if there is a way I couldn’t see the option. So I had to create a new project and then port my code over. Thankfully this provided an excellent excuse to refactor. I particularly enjoyed that the code, for lack of a better term, just worked. I didn’t have any third-party NuGet packages or extra content, so the basic Windows libraries could just be bolted on and the code compiled as normal. Within about 20 minutes I had completely ported my application over; an hour after that I’d made it a little prettier.

Since I was finally moving my code onto the same server as the database, I decided to remove the API calls and use a direct MySQL connector. This meant that I did have to get a NuGet package after all, specifically MySQL.Data. It currently supports the standard .NET Framework, but it isn’t supported on .NET Core yet unless you get the RC or DMR version. I installed that, did some upgrades and compiled the app.

Setup on the Unix server

So, running it on CentOS. I initially downloaded the 64-bit runtime binaries linked from the Microsoft blog directly onto my server, then unzipped them and followed the generic instructions. Microsoft’s instructions tell you to unzip them and leave them in your home directory, but I wanted to put them in more of an application directory, so I did the following.

cd ~/
mkdir dotnet
cd ./dotnet
wget https://download.microsoft.com/download/5/F/0/5F0362BD-7D0A-4A9D-9BF9-022C6B15B04D/dotnet-runtime-2.0.0-linux-x64.tar.gz
tar zxvf dotnet-runtime-2.0.0-linux-x64.tar.gz
cd ../
mv ./dotnet /etc/

This meant my .NET Core directory was at /etc/dotnet/… and I now needed to add it to my PATH so the dotnet command could be found. Microsoft tells you to run the export in your command line, but I found that each time I restarted my shell session it would forget what I’d set up, so in the end I added it to my local .bashrc file.

nano ~/.bashrc
# then, at the bottom of the file, add:
export PATH=$PATH:/etc/dotnet

Save, open a new shell session (or run “source ~/.bashrc”), and now I could run any dotnet application with the dotnet command, such as “dotnet -h”.
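One wrinkle with a plain export line in .bashrc is that the same directory gets appended again every time the file is re-sourced. A minimal sketch of a guard against that follows; path_append is a hypothetical helper name of my own, not something Microsoft's instructions provide, and /etc/dotnet is simply the directory used in this post.

```shell
# Append a directory to PATH only if it is not already listed.
# path_append is a hypothetical helper, not part of any .NET tooling.
path_append() {
    local dir="$1"
    case ":$PATH:" in
        *":$dir:"*) ;;              # already present, leave PATH alone
        *) PATH="$PATH:$dir" ;;     # otherwise add it to the end
    esac
}

path_append /etc/dotnet
export PATH
```

Dropped at the bottom of ~/.bashrc in place of the single export line, this should leave PATH unchanged on repeated sourcing.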

I did have some trouble on my first application run due to some missing libraries, but they were easy to install through the usual package manager.

yum install libicu libunwind
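If you would rather check for the libraries before the first run, something along these lines may help. It is only a sketch: missing_libs is a hypothetical helper, it expects a listing in the format printed by "ldconfig -p" on standard input, and the library file names it is given (libicuuc for the libicu package, libunwind for libunwind) are my assumption about what those packages install.

```shell
# Print each named shared library that does NOT appear in a listing
# read from standard input (expected format: output of `ldconfig -p`).
# missing_libs is a hypothetical helper name.
missing_libs() {
    local listing lib
    listing="$(cat)"
    for lib in "$@"; do
        case "$listing" in
            *"$lib.so"*) ;;                 # found, nothing to report
            *) printf '%s\n' "$lib" ;;      # not found, print its name
        esac
    done
}

# Typical use on the server (ldconfig may live in /sbin):
# ldconfig -p | missing_libs libicuuc libunwind
```

Anything it prints is a candidate for the yum install line above.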

Package & Run my App

I’m used to a console application building and dumping an executable in the output directory with an App.config and so on. .NET Core uses JSON files and DLLs for its binaries instead, though they shouldn’t be treated any differently really. The main difference to factor in is that your Unix installation doesn’t have a GAC. The GAC is the Global Assembly Cache: when you run an application on Windows and the code references a DLL, it’ll normally ask the GAC where that DLL is installed so it can be loaded and used as normal, even if the DLL hasn’t been shipped with the application.

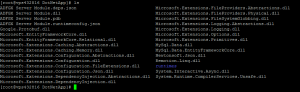

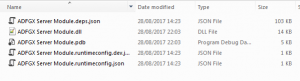

Unix obviously doesn’t have a GAC, so when you try to run your application you need to make sure that, instead of just moving your JSON and DLL files up to the server, you actually publish the application and move everything. To show you what I mean, the two screenshots in this section compare the standard “Build” output of a .NET Core application with the “Publish” output.

The publish job packages up everything, including runtimes and referenced libraries, so for this to run on Unix I needed to publish the application and move that output onto the server. Once it was on the server, I could get away with uploading just my main DLL for later changes, but you must publish at least once or you may start to get runtime errors.
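A quick sanity check before uploading can catch the case where the Build folder was copied by mistake. The sketch below is hedged throughout: check_publish_dir is a hypothetical helper, and the file names in the usage line (in particular MySql.Data.dll as the connector's assembly, and the ./publish folder) are assumptions about this particular project rather than anything the tooling guarantees.

```shell
# Check that a folder contains the main DLL, its runtimeconfig.json and
# any extra files (for example referenced library DLLs) named by the caller.
# check_publish_dir is a hypothetical helper name.
check_publish_dir() {
    local dir="$1" app="$2" name missing=0
    shift 2
    for name in "$app.dll" "$app.runtimeconfig.json" "$@"; do
        if [ ! -f "$dir/$name" ]; then
            echo "missing from $dir: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example call, assuming the publish output was copied to ./publish:
# check_publish_dir ./publish "ADFGX Server Module" MySql.Data.dll
```

The function returns non-zero and names the missing files on stderr, so it can gate whatever copy command you use to push the folder to the server.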

Once it’s all on your server, let it run.

dotnet ./ADFGX\ Server\ Module.dll

or if you want it to run in a background session kick it off with a screen

screen -dmS DotNetApp dotnet ./ADFGX\ Server\ Module.dll
screen -x DotNetApp
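The backslashes in those commands are there because the DLL name contains spaces, and without them the shell would hand dotnet three separate arguments. A small illustration, using a throwaway count_args function (a hypothetical name) that just reports how many arguments it received:

```shell
# Report how many arguments the shell passed in.
count_args() { echo "$#"; }

count_args ./ADFGX\ Server\ Module.dll     # escaped spaces: prints 1
count_args "./ADFGX Server Module.dll"     # quoted: prints 1
count_args ./ADFGX Server Module.dll       # unescaped: prints 3
```

So quoting the whole path works just as well as escaping each space, if you find that easier to read.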

Conclusion

All in all I’m very pleased with the .NET Core stuff. It’s cut down the number of IDEs I need to have installed, and it means I can now start hosting some more Windows technologies on my Unix server, which should save me a few pennies as well.

Hopefully in the coming months we’ll see Microsoft bring out some more application types, and I’m looking forward to more NuGet support. But what I’ve seen so far of .NET Core seems really stable, very easy to set up, and really easy to migrate your existing stuff over to.
